Face detection in iOS 11

In iOS 11, Apple introduced a new API, the Vision framework, which supports face detection, face feature (landmark) detection, object tracking, and more. In this post we look at how face detection works.

First, we build a simple app that picks a photo:

@IBAction func onTapTakePhoto(sender: AnyObject) {

self.showAlertChoosePhoto()

}

func showAlertChoosePhoto() {

let optionMenu = UIAlertController(title: nil, message: nil, preferredStyle: .actionSheet)

let takePhotoAction = UIAlertAction(title: "Take photo", style: .default, handler: {

(alert: UIAlertAction!) -> Void in

self.showImagePicker(sourceType: .camera)

})

let getFromAlbumAction = UIAlertAction(title: "From photo album", style: .default, handler: {

(alert: UIAlertAction!) -> Void in

self.showImagePicker(sourceType: .photoLibrary)

})

let cancelAction = UIAlertAction(title: "Cancel", style: .cancel, handler: {

(alert: UIAlertAction!) -> Void in

})

optionMenu.addAction(takePhotoAction)

optionMenu.addAction(getFromAlbumAction)

optionMenu.addAction(cancelAction)

self.present(optionMenu, animated: true, completion: nil)

}

func showImagePicker(sourceType:UIImagePickerControllerSourceType) {

if (UIImagePickerController.isSourceTypeAvailable(sourceType)) {

let imagePicker = UIImagePickerController()

imagePicker.delegate = self

imagePicker.sourceType = sourceType

imagePicker.allowsEditing = false

self.present(imagePicker, animated: true, completion: nil)

}

}

} // closes the DetectImageViewController class (declaration not shown)

We use UIImagePickerController to pick the photo, so we implement its delegate protocols, UIImagePickerControllerDelegate and UINavigationControllerDelegate:

extension DetectImageViewController : UIImagePickerControllerDelegate, UINavigationControllerDelegate {

func imagePickerController(_ picker: UIImagePickerController, didFinishPickingMediaWithInfo info: [String : Any]) {

if let image = info[UIImagePickerControllerOriginalImage] as? UIImage {

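imageView.image = image

}

// the delegate is responsible for dismissing the picker

picker.dismiss(animated: true, completion: nil)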

}

}

We also need to add usage descriptions to Info.plist so that the app can access the camera and the photo library:

- Privacy - Camera Usage Description (NSCameraUsageDescription): access the camera to take a photo

- Privacy - Photo Library Usage Description (NSPhotoLibraryUsageDescription): access the photo library to pick a photo

That completes the image-picking step of the app.

Next, we implement the action for the process button. First we have to translate the image orientation from UIImageOrientation to kCGImagePropertyOrientation.

@IBAction func process(_ sender: UIButton) {

var orientation:Int32 = 0

// detect image orientation, we need it to be accurate for the face detection to work

switch image.imageOrientation {

case .up:

orientation = 1

case .right:

orientation = 6

case .down:

orientation = 3

case .left:

orientation = 8

default:

orientation = 1

}

// vision

let faceLandmarksRequest = VNDetectFaceLandmarksRequest(completionHandler: self.handleFaceFeatures)

let requestHandler = VNImageRequestHandler(cgImage: image.cgImage!, orientation: orientation, options: [:])

do {

try requestHandler.perform([faceLandmarksRequest])

} catch {

print(error)

}

}
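
The `switch` above handles the four unmirrored orientations and sends everything else to 1 through `default`. As a minimal sketch of the full mapping, the function below covers all eight cases using the EXIF numbering, which matches the raw values of CGImagePropertyOrientation. The `ImageOrientation` enum and the `exifOrientation(for:)` function are hypothetical names introduced here so the example runs without UIKit; in the app you would switch over `image.imageOrientation` instead. Note that released versions of the Vision SDK expect a CGImagePropertyOrientation value for the `orientation` parameter rather than a plain integer, so a conversion may be needed depending on the SDK you build against.

```swift
// Hypothetical stand-in for UIImageOrientation so the sketch runs without UIKit.
enum ImageOrientation {
    case up, down, left, right
    case upMirrored, downMirrored, leftMirrored, rightMirrored
}

// Returns the EXIF orientation value (1...8) for a given image orientation.
// The numbers match the raw values of CGImagePropertyOrientation.
func exifOrientation(for orientation: ImageOrientation) -> Int32 {
    switch orientation {
    case .up:            return 1
    case .upMirrored:    return 2
    case .down:          return 3
    case .downMirrored:  return 4
    case .leftMirrored:  return 5
    case .right:         return 6
    case .rightMirrored: return 7
    case .left:          return 8
    }
}
```

Because the `switch` is exhaustive, the compiler flags any case that is left out, which a `default` branch would hide.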

Once the orientation is translated, we import the Vision framework and call its APIs. We add a function that is called when Vision finishes processing:

func handleFaceFeatures(request: VNRequest, error: Error?) {

guard let observations = request.results as? [VNFaceObservation] else {

fatalError("unexpected result type!")

}

for face in observations {

addFaceLandmarksToImage(face)

}

}

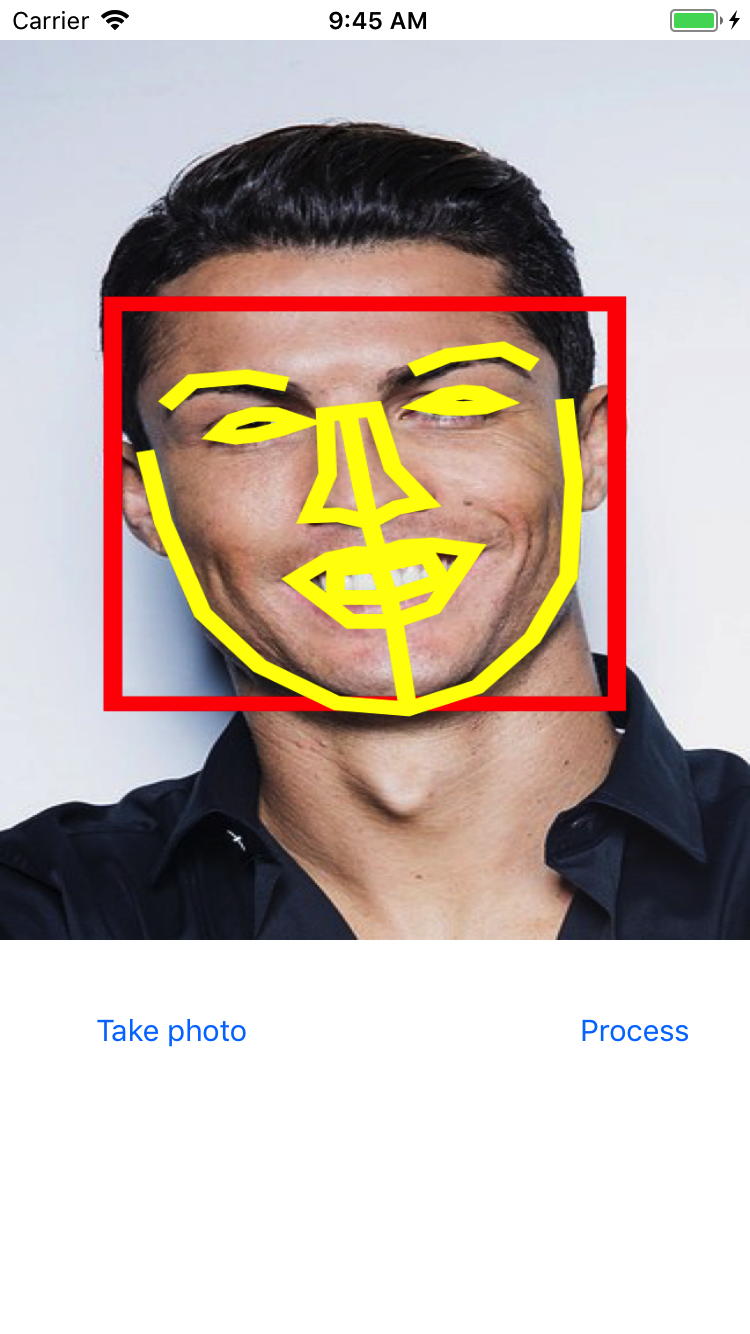

We also add another function that draws the face using the data returned by the face landmarks detection request:

func addFaceLandmarksToImage(_ face: VNFaceObservation) {

UIGraphicsBeginImageContextWithOptions(image.size, true, 0.0)

let context = UIGraphicsGetCurrentContext()

// draw the image

image.draw(in: CGRect(x: 0, y: 0, width: image.size.width, height: image.size.height))

context?.translateBy(x: 0, y: image.size.height)

context?.scaleBy(x: 1.0, y: -1.0)

// draw the face rect

let w = face.boundingBox.size.width * image.size.width

let h = face.boundingBox.size.height * image.size.height

let x = face.boundingBox.origin.x * image.size.width

let y = face.boundingBox.origin.y * image.size.height

let faceRect = CGRect(x: x, y: y, width: w, height: h)

context?.saveGState()

context?.setStrokeColor(UIColor.red.cgColor)

context?.setLineWidth(8.0)

context?.addRect(faceRect)

context?.drawPath(using: .stroke)

context?.restoreGState()

// face contour

context?.saveGState()

context?.setStrokeColor(UIColor.yellow.cgColor)

if let landmark = face.landmarks?.faceContour {

for i in 0..<landmark.pointCount { // last point is 0,0

let point = landmark.point(at: i)

if i == 0 {

context?.move(to: CGPoint(x: x + CGFloat(point.x) * w, y: y + CGFloat(point.y) * h))

} else {

context?.addLine(to: CGPoint(x: x + CGFloat(point.x) * w, y: y + CGFloat(point.y) * h))

}

}

}

context?.setLineWidth(8.0)

context?.drawPath(using: .stroke)

context?.restoreGState()

// outer lips

context?.saveGState()

context?.setStrokeColor(UIColor.yellow.cgColor)

if let landmark = face.landmarks?.outerLips {

for i in 0..<landmark.pointCount { // last point is 0,0

let point = landmark.point(at: i)

if i == 0 {

context?.move(to: CGPoint(x: x + CGFloat(point.x) * w, y: y + CGFloat(point.y) * h))

} else {

context?.addLine(to: CGPoint(x: x + CGFloat(point.x) * w, y: y + CGFloat(point.y) * h))

}

}

}

context?.closePath()

context?.setLineWidth(8.0)

context?.drawPath(using: .stroke)

context?.restoreGState()

// inner lips

context?.saveGState()

context?.setStrokeColor(UIColor.yellow.cgColor)

if let landmark = face.landmarks?.innerLips {

for i in 0..<landmark.pointCount { // last point is 0,0

let point = landmark.point(at: i)

if i == 0 {

context?.move(to: CGPoint(x: x + CGFloat(point.x) * w, y: y + CGFloat(point.y) * h))

} else {

context?.addLine(to: CGPoint(x: x + CGFloat(point.x) * w, y: y + CGFloat(point.y) * h))

}

}

}

context?.closePath()

context?.setLineWidth(8.0)

context?.drawPath(using: .stroke)

context?.restoreGState()

// left eye

context?.saveGState()

context?.setStrokeColor(UIColor.yellow.cgColor)

if let landmark = face.landmarks?.leftEye {

for i in 0..<landmark.pointCount { // last point is 0,0

let point = landmark.point(at: i)

if i == 0 {

context?.move(to: CGPoint(x: x + CGFloat(point.x) * w, y: y + CGFloat(point.y) * h))

} else {

context?.addLine(to: CGPoint(x: x + CGFloat(point.x) * w, y: y + CGFloat(point.y) * h))

}

}

}

context?.closePath()

context?.setLineWidth(8.0)

context?.drawPath(using: .stroke)

context?.restoreGState()

// right eye

context?.saveGState()

context?.setStrokeColor(UIColor.yellow.cgColor)

if let landmark = face.landmarks?.rightEye {

for i in 0..<landmark.pointCount { // last point is 0,0

let point = landmark.point(at: i)

if i == 0 {

context?.move(to: CGPoint(x: x + CGFloat(point.x) * w, y: y + CGFloat(point.y) * h))

} else {

context?.addLine(to: CGPoint(x: x + CGFloat(point.x) * w, y: y + CGFloat(point.y) * h))

}

}

}

context?.closePath()

context?.setLineWidth(8.0)

context?.drawPath(using: .stroke)

context?.restoreGState()

// left pupil

context?.saveGState()

context?.setStrokeColor(UIColor.yellow.cgColor)

if let landmark = face.landmarks?.leftPupil {

for i in 0..<landmark.pointCount { // last point is 0,0

let point = landmark.point(at: i)

if i == 0 {

context?.move(to: CGPoint(x: x + CGFloat(point.x) * w, y: y + CGFloat(point.y) * h))

} else {

context?.addLine(to: CGPoint(x: x + CGFloat(point.x) * w, y: y + CGFloat(point.y) * h))

}

}

}

context?.closePath()

context?.setLineWidth(8.0)

context?.drawPath(using: .stroke)

context?.restoreGState()

// right pupil

context?.saveGState()

context?.setStrokeColor(UIColor.yellow.cgColor)

if let landmark = face.landmarks?.rightPupil {

for i in 0..<landmark.pointCount { // last point is 0,0

let point = landmark.point(at: i)

if i == 0 {

context?.move(to: CGPoint(x: x + CGFloat(point.x) * w, y: y + CGFloat(point.y) * h))

} else {

context?.addLine(to: CGPoint(x: x + CGFloat(point.x) * w, y: y + CGFloat(point.y) * h))

}

}

}

context?.closePath()

context?.setLineWidth(8.0)

context?.drawPath(using: .stroke)

context?.restoreGState()

// left eyebrow

context?.saveGState()

context?.setStrokeColor(UIColor.yellow.cgColor)

if let landmark = face.landmarks?.leftEyebrow {

for i in 0..<landmark.pointCount { // last point is 0,0

let point = landmark.point(at: i)

if i == 0 {

context?.move(to: CGPoint(x: x + CGFloat(point.x) * w, y: y + CGFloat(point.y) * h))

} else {

context?.addLine(to: CGPoint(x: x + CGFloat(point.x) * w, y: y + CGFloat(point.y) * h))

}

}

}

context?.setLineWidth(8.0)

context?.drawPath(using: .stroke)

context?.restoreGState()

// right eyebrow

context?.saveGState()

context?.setStrokeColor(UIColor.yellow.cgColor)

if let landmark = face.landmarks?.rightEyebrow {

for i in 0..<landmark.pointCount { // last point is 0,0

let point = landmark.point(at: i)

if i == 0 {

context?.move(to: CGPoint(x: x + CGFloat(point.x) * w, y: y + CGFloat(point.y) * h))

} else {

context?.addLine(to: CGPoint(x: x + CGFloat(point.x) * w, y: y + CGFloat(point.y) * h))

}

}

}

context?.setLineWidth(8.0)

context?.drawPath(using: .stroke)

context?.restoreGState()

// nose

context?.saveGState()

context?.setStrokeColor(UIColor.yellow.cgColor)

if let landmark = face.landmarks?.nose {

for i in 0..<landmark.pointCount { // last point is 0,0

let point = landmark.point(at: i)

if i == 0 {

context?.move(to: CGPoint(x: x + CGFloat(point.x) * w, y: y + CGFloat(point.y) * h))

} else {

context?.addLine(to: CGPoint(x: x + CGFloat(point.x) * w, y: y + CGFloat(point.y) * h))

}

}

}

context?.closePath()

context?.setLineWidth(8.0)

context?.drawPath(using: .stroke)

context?.restoreGState()

// nose crest

context?.saveGState()

context?.setStrokeColor(UIColor.yellow.cgColor)

if let landmark = face.landmarks?.noseCrest {

for i in 0..<landmark.pointCount { // last point is 0,0

let point = landmark.point(at: i)

if i == 0 {

context?.move(to: CGPoint(x: x + CGFloat(point.x) * w, y: y + CGFloat(point.y) * h))

} else {

context?.addLine(to: CGPoint(x: x + CGFloat(point.x) * w, y: y + CGFloat(point.y) * h))

}

}

}

context?.setLineWidth(8.0)

context?.drawPath(using: .stroke)

context?.restoreGState()

// median line

context?.saveGState()

context?.setStrokeColor(UIColor.yellow.cgColor)

if let landmark = face.landmarks?.medianLine {

for i in 0..<landmark.pointCount { // last point is 0,0

let point = landmark.point(at: i)

if i == 0 {

context?.move(to: CGPoint(x: x + CGFloat(point.x) * w, y: y + CGFloat(point.y) * h))

} else {

context?.addLine(to: CGPoint(x: x + CGFloat(point.x) * w, y: y + CGFloat(point.y) * h))

}

}

}

context?.setLineWidth(8.0)

context?.drawPath(using: .stroke)

context?.restoreGState()

// get the final image

let finalImage = UIGraphicsGetImageFromCurrentImageContext()

// end drawing context

UIGraphicsEndImageContext()

imageView.image = finalImage

}
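
Every landmark section in the function above repeats the same two conversions: the normalized bounding box is scaled to the image size, and each landmark point, which is normalized to that bounding box, is mapped into image coordinates. The sketch below isolates that arithmetic with plain Foundation geometry types so it can run on its own. The function names `imageRect(forNormalized:imageSize:)` and `imagePoint(forNormalized:in:)` are hypothetical helpers introduced for illustration, and the input values are made up.

```swift
import Foundation

// Scales a normalized rectangle (components in 0...1, like face.boundingBox)
// to the coordinate space of an image with the given size.
func imageRect(forNormalized box: CGRect, imageSize: CGSize) -> CGRect {
    return CGRect(x: box.origin.x * imageSize.width,
                  y: box.origin.y * imageSize.height,
                  width: box.size.width * imageSize.width,
                  height: box.size.height * imageSize.height)
}

// Maps a landmark point that is normalized to the face rectangle
// into image coordinates (the "x + point.x * w" step in the drawing code).
func imagePoint(forNormalized point: CGPoint, in faceRect: CGRect) -> CGPoint {
    return CGPoint(x: faceRect.origin.x + point.x * faceRect.size.width,
                   y: faceRect.origin.y + point.y * faceRect.size.height)
}

// Example with made-up values: a face covering the middle of an 800x600 image.
let exampleFaceRect = imageRect(forNormalized: CGRect(x: 0.25, y: 0.5, width: 0.5, height: 0.25),
                                imageSize: CGSize(width: 800, height: 600))
let exampleCenter = imagePoint(forNormalized: CGPoint(x: 0.5, y: 0.5), in: exampleFaceRect)
```

With helpers like these, each landmark section could shrink to a loop that converts points and adds them to the path, with only the region and the choice of closing the path varying between sections.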

As you can see, Vision provides many landmarks: the face contour, the mouth (both inner and outer lips), the eyes together with the pupils and eyebrows, the nose and nose crest, and the median line of the face.

Build the app and we get the following result: